LangGraph: Orchestrating Stateful, Long-Running Agents with Ease

01-07-2025

W.M. Sandeepa Sineth Wickramasinghe

Software Engineer

Building intelligent agents has outgrown simple prompt engineering. Today's practical AI applications go beyond simple chatbot capabilities, requiring context management, human approvals at crucial checkpoints (human-in-the-loop), mixed-initiative interfaces, and fault-tolerant workflows. Legacy frameworks are poorly suited to blending these intricate interactions cohesively.

LangGraph fills that gap. It is infrastructure designed for automating complex business processes with resilient, long-lived AI agents. It lets you architect the sophisticated multi-step reasoning sequences and processing pipelines that modern production-level AI systems require, with effective failure recovery.

What is LangGraph?

LangGraph is a low-level orchestration framework for building stateful, long-running AI agents, created by LangChain Inc. As the infrastructure layer responsible for agent workflow management, it takes care of state persistence, error recovery, branching logic, and coordination, so you can focus on building intelligent behavior rather than on complex foundational systems.

LangGraph represents agent logic as a directed graph: each node is a computational step, such as an LLM call, a tool invocation, or human input (human-in-the-loop), and edges control the flow of execution. While it integrates tightly with LangChain's tools, memory, and agents, LangGraph stays framework-agnostic, so you can freely use other large language models (LLMs), vector stores, and toolchains when building production-ready AI systems.

Drawing inspiration from Pregel and Apache Beam, and offering a Pythonic interface similar to NetworkX, LangGraph can run computations in parallel and supports loops as well as conditionals.

Why Use LangGraph for Agents? (Core Benefits)

LangGraph is critical for production-grade applications as it helps overcome some of the most difficult problems associated with reliably deploying long-running AI agents. Here's what sets it apart:

- Durable Execution: Failures are inevitable in production, so LangGraph checkpoints work in progress as a workflow runs. An agent that is interrupted partway through by a restart, timeout, or crash can resume from its last checkpoint instead of starting over. The same mechanism helps workflows survive system upgrades and scaling events.

- Human-in-the-Loop Support: Automation does not remove the need for human oversight. Because LangGraph persists state continuously, a workflow can pause while it waits for human feedback, whether that is a detailed review or a simple approve/deny confirmation, and then resume with its state intact, even inside complex, multi-layered automation.

- Rich, Persistent Memory: Along with tracking ongoing sessions, LangGraph remembers past interactions over time, supporting both short-term and long-term memory. It works with both structured and unstructured data, making it possible to deliver deeply contextual and personalized experiences.

- LangSmith Integration: Built-in observability tools give you full visibility into agent behavior. With LangSmith you can trace decisions, inspect state, and evaluate performance, all of which is vital for debugging, tuning, and deploying with confidence.

- Enterprise-Ready Infrastructure: LangGraph is built for scale, which eases the move from prototype to production by addressing concerns such as distributed execution, error handling, load balancing, and monitoring.

Unlike higher-level abstractions, LangGraph gives you full control over your agents' logic while taking care of the complex orchestration behind the scenes. You design the intelligence; LangGraph ensures it runs reliably, safely, and at scale.
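To make the idea of durable execution more concrete, here is a minimal, library-free sketch of checkpoint-and-resume. It is not LangGraph's implementation: the `checkpoints` store, the `run_workflow` helper, the `crash_before` parameter, and the three step functions are all hypothetical names invented for illustration.

```python
from typing import Callable, Optional

# Hypothetical in-memory checkpoint store: thread_id -> (next step index, state).
# A production system would persist this to a database instead.
checkpoints: dict[str, tuple[int, dict]] = {}


def run_workflow(
    thread_id: str,
    steps: list[Callable[[dict], dict]],
    initial_state: dict,
    crash_before: Optional[int] = None,
) -> dict:
    """Run steps in order, saving a checkpoint after each completed step."""
    # Resume from the saved checkpoint if one exists, otherwise start fresh.
    index, state = checkpoints.get(thread_id, (0, dict(initial_state)))
    while index < len(steps):
        if crash_before == index:
            raise RuntimeError(f"simulated crash before step {index}")
        state = steps[index](state)
        index += 1
        checkpoints[thread_id] = (index, dict(state))
    return state


executed: list[str] = []  # records which steps actually ran


def fetch(state: dict) -> dict:
    executed.append("fetch")
    return {**state, "data": [1, 2, 3]}


def summarize(state: dict) -> dict:
    executed.append("summarize")
    return {**state, "total": sum(state["data"])}


def publish(state: dict) -> dict:
    executed.append("publish")
    return {**state, "published": True}


steps = [fetch, summarize, publish]

try:
    # First run: simulate a failure just before the "publish" step.
    run_workflow("job-1", steps, {}, crash_before=2)
except RuntimeError:
    pass

# Second run: resumes at "publish"; "fetch" and "summarize" are not repeated.
final_state = run_workflow("job-1", steps, {})
print(executed)
print(final_state)
```

The point of the sketch is that completed work is never redone: because progress is saved after every step, a rerun with the same thread ID continues from the last checkpoint.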

The LangGraph Ecosystem

LangGraph can be used on its own, but it is most effective as part of a complete agent development ecosystem, where integration with the broader platform adds powerful capabilities.

- LangSmith serves as your control center for agent development. In addition to logging, it offers visual debugging and step-by-step execution tracing alongside comprehensive performance evaluation. With LangSmith, agent optimization becomes a data-informed process rather than guesswork.

- LangChain Integration brings compatibility with LangChain's LLM pipelines, memory, and tools, which streamlines development. While completely optional, this integration is a natural fit for teams already using LangChain. That said, LangGraph remains fully framework-agnostic, so you can use it with any LLM stack or custom tooling.

- LangGraph Platform (a paid offering) is aimed at teams wanting a faster path to production. It includes LangGraph Studio, a visual interface for inspecting, testing, and debugging agent workflows. It also provides tools for deploying agents across dev, staging, and production environments.

These components form a unified, modular ecosystem that supports the path from prototyping to production. Solo developers and enterprise teams alike can adopt only what they need and scale up as their agent applications expand.

Understanding LangGraph Graphs

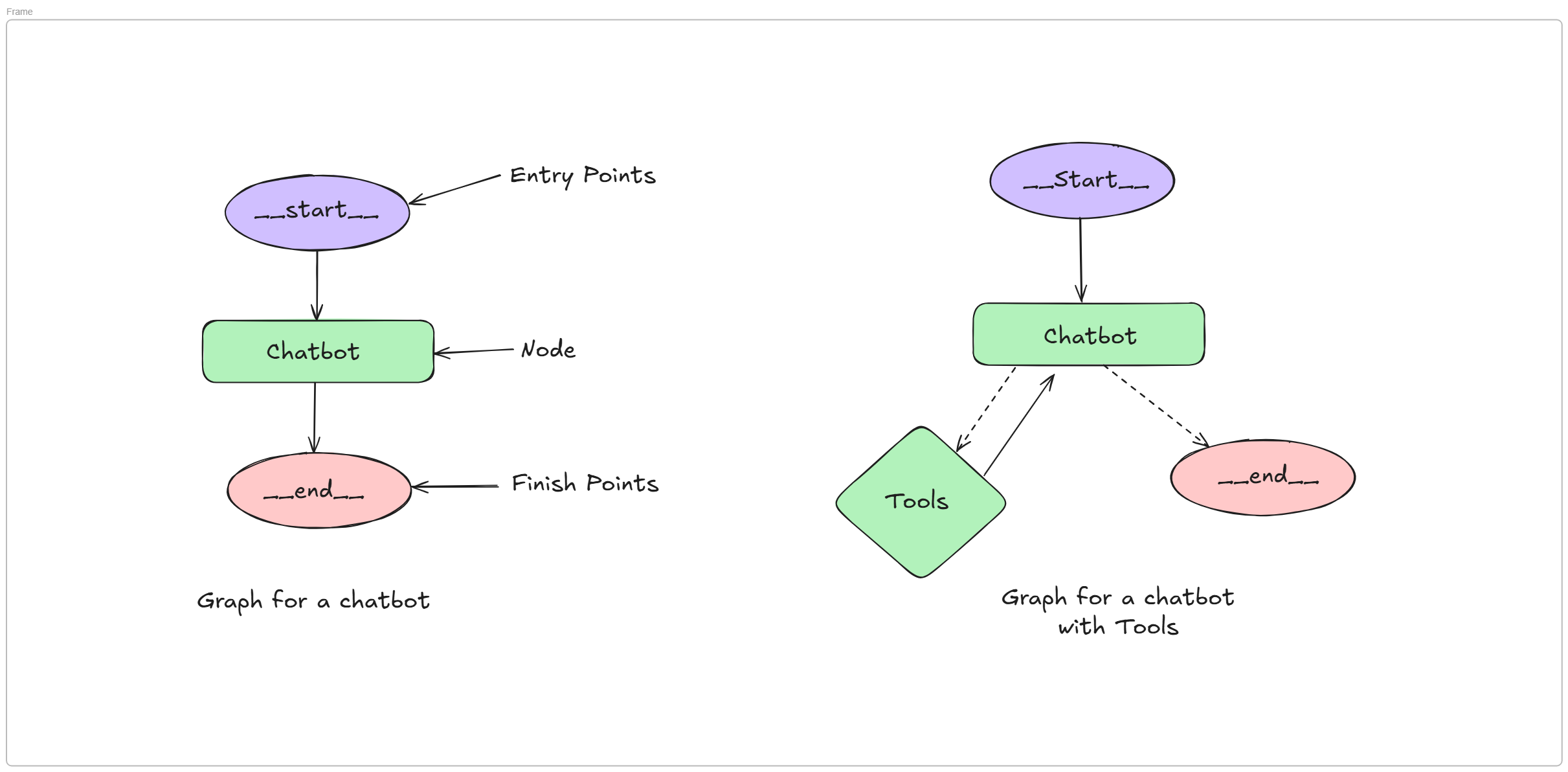

At its core, LangGraph depicts AI workflows as directed graphs, a visual and computational structure where:

- Nodes represent single processing actions (e.g., invoking an LLM, tool usage, or human input).

- Edges define the order in which nodes execute.

- State describes the shared data that undergoes modification at each node within the graph.

Think of it like a flowchart, with boxes (nodes) completing tasks and arrows (edges) showing what happens next. Unlike a plain flowchart, however, LangGraph graphs are stateful: they retain information as data flows through them.
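The relationship between nodes, edges, and shared state can be sketched in a few lines of plain Python. This is a simplified illustration rather than LangGraph's own API or internals; `run_graph`, `greet`, and `shout` are hypothetical names, and the state is a plain dictionary.

```python
from typing import Callable, Optional

Node = Callable[[dict], dict]  # a node reads the state and returns an update


def run_graph(
    nodes: dict[str, Node],
    edges: dict[str, str],
    entry: str,
    state: dict,
) -> dict:
    """Follow edges from the entry node, merging each node's update into the state."""
    current: Optional[str] = entry
    while current is not None:
        update = nodes[current](state)
        state = {**state, **update}  # shared state is carried from node to node
        current = edges.get(current)  # no outgoing edge means the graph stops
    return state


def greet(state: dict) -> dict:
    return {"greeting": f"Hello, {state['name']}!"}


def shout(state: dict) -> dict:
    return {"greeting": state["greeting"].upper()}


nodes = {"greet": greet, "shout": shout}
edges = {"greet": "shout"}  # "shout" has no outgoing edge, so execution ends there

result = run_graph(nodes, edges, entry="greet", state={"name": "Ada"})
print(result)
```

Each node only returns the keys it changes; the runner merges them into the shared state, which is why the second node can see the first node's output.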

Key Graph Concepts

- Entry Points: Where execution starts (in the example later in this article, the "chatbot" node is the entry point).

- Finish Points: Where the graph stops (in the same example, the "chatbot" node is also the finish point).

- Conditional Logic: Conditions can be attached to edges to decide which node runs next based on the current state.

- Loops: Graphs can route back to earlier nodes, which enables iterative procedures.

This graph-based approach makes complex AI workflows visual, debuggable, and maintainable; you can see exactly how data flows and where decisions are made.
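Conditional logic and loops can be illustrated with a small, library-free sketch in which every node has a routing function that picks the next node from the current state. The names here (`END`, `run_graph`, `draft`, `review`, `max_steps`) are hypothetical and chosen for this example only; in LangGraph itself, conditional routing is declared with `add_conditional_edges`.

```python
from typing import Callable

END = "__end__"  # hypothetical sentinel meaning "stop here"


def run_graph(
    nodes: dict[str, Callable[[dict], dict]],
    routers: dict[str, Callable[[dict], str]],
    entry: str,
    state: dict,
    max_steps: int = 25,
) -> dict:
    """Run nodes until a router returns END, with a cap to guard against endless loops."""
    current = entry
    for _ in range(max_steps):
        state = {**state, **nodes[current](state)}
        current = routers[current](state)  # conditional edge: choose the next node
        if current == END:
            return state
    raise RuntimeError("step limit reached; the graph may be looping forever")


def draft(state: dict) -> dict:
    attempt = state["attempts"] + 1
    return {"attempts": attempt, "text": f"draft v{attempt}"}


def review(state: dict) -> dict:
    # Toy rule: only the third draft is good enough.
    return {"approved": state["attempts"] >= 3}


nodes = {"draft": draft, "review": review}
routers = {
    "draft": lambda state: "review",
    # Loop back to "draft" until the review approves the text.
    "review": lambda state: END if state["approved"] else "draft",
}

result = run_graph(nodes, routers, entry="draft", state={"attempts": 0})
print(result)
```

The loop is just an edge that points backwards, and the condition on that edge decides when to leave it. The step cap is a design choice that keeps a faulty condition from running forever.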

Getting Started with LangGraph: Creating a Kid-Friendly Science Chatbot (CLI version)

In this guide, you'll build a simple yet powerful science chatbot using LangGraph and Google's Gemini model. Designed for kids under 15, the bot offers fun, easy-to-understand science explanations right from your terminal. You'll learn how to create a conversational AI with memory and personality, all in Python.

Prerequisites

- Python installed on your system

- A free Google Gemini API Key (get it via Google AI Studio)

Step 1: Project Setup

Install required libraries:

```bash
pip install -U langgraph langchain-google-genai python-dotenv
```

In your project folder, create a .env file and add:

GOOGLE_API_KEY="YOUR_API_KEY_HERE"

Step 2: Define the Chatbot's Memory (State)

Create main.py and define the conversation state:

```python
from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph.message import add_messages


class State(TypedDict):
    # add_messages appends new messages to the list instead of overwriting it
    messages: Annotated[list, add_messages]
```
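The `Annotated[list, add_messages]` annotation attaches a reducer to the `messages` key: a function that decides how a node's update is merged into the existing value. The sketch below shows the general idea using only the standard library. It is an assumption-laden illustration, not how LangGraph is implemented; `append_messages`, `DemoState`, and `apply_update` are hypothetical names, and the real `add_messages` does more than append (for example, it can update an existing message that has the same ID).

```python
from typing import Annotated, TypedDict, get_type_hints


def append_messages(existing: list, new: list) -> list:
    """Hypothetical reducer: return the old list followed by the new items."""
    return existing + new


class DemoState(TypedDict):
    messages: Annotated[list, append_messages]  # merged with the reducer
    topic: str  # no reducer: a new value overwrites the old one


def apply_update(state: dict, update: dict) -> dict:
    """Merge an update into the state, using a key's reducer when it has one."""
    hints = get_type_hints(DemoState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        reducers = getattr(hints[key], "__metadata__", ())
        if reducers:
            merged[key] = reducers[0](state.get(key, []), value)
        else:
            merged[key] = value
    return merged


state = {"messages": ["user: hi"], "topic": "space"}
state = apply_update(state, {"messages": ["ai: hello!"], "topic": "volcanoes"})
print(state)
```

This is why the chatbot node later in the tutorial can return only the newest message: the reducer takes care of adding it to the conversation so far.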

Step 3: Set Up the Brain (LLM) and Prompt

Add Gemini integration and create the chatbot's personality:

```python
import os

from dotenv import load_dotenv
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_google_genai import ChatGoogleGenerativeAI

# Load GOOGLE_API_KEY from the .env file into the environment
load_dotenv()

llm = ChatGoogleGenerativeAI(model="gemini-1.5-flash")

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a friendly and fun science chatbot for kids. "
            "Explain concepts simply, use fun analogies, and always be encouraging. "
            "Keep your answers short and easy to understand.",
        ),
        # The conversation history is inserted here at run time
        MessagesPlaceholder(variable_name="messages"),
    ]
)

# Pipe the prompt into the model: the formatted prompt becomes the LLM's input
chain = prompt | llm
```
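If the `prompt | llm` syntax is unfamiliar, the following library-free sketch shows one way such pipe composition can work: the `|` operator builds a new step that runs the left side and feeds its output to the right side. The `Step` class, `build_prompt`, and `fake_llm` are hypothetical stand-ins invented for this example; they are not LangChain classes, and no real model is called.

```python
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a composable pipeline step."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new step that runs a, then feeds its output to b
        return Step(lambda value: other.invoke(self.invoke(value)))


SYSTEM_TEXT = "You are a friendly science chatbot for kids."


def build_prompt(inputs: dict) -> list:
    # Put the system message first, then the conversation so far.
    return [("system", SYSTEM_TEXT), *inputs["messages"]]


def fake_llm(messages: list) -> tuple:
    # Stand-in for a model call: echo the last user message.
    _, last_text = messages[-1]
    return ("ai", f"Great question about: {last_text}")


prompt_step = Step(build_prompt)
llm_step = Step(fake_llm)
demo_chain = prompt_step | llm_step

reply = demo_chain.invoke({"messages": [("user", "Why is the sky blue?")]})
print(reply)
```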

Step 4: Build the Graph Logic

Create the chatbot's thinking process and memory-aware flow:

```python
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, START


def chatbot_node(state: State):
    """This node calls the LLM chain to generate a response."""
    return {"messages": [chain.invoke({"messages": state["messages"]})]}


graph_builder = StateGraph(State)
graph_builder.add_node("chatbot", chatbot_node)

# The single "chatbot" node is both where the graph starts and where it stops
graph_builder.set_entry_point("chatbot")
graph_builder.set_finish_point("chatbot")

# The checkpointer saves the conversation state between turns (in memory only)
memory = MemorySaver()
graph = graph_builder.compile(checkpointer=memory)
```
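Compiling the graph with a checkpointer is what gives the chatbot memory: state is saved per conversation thread and loaded again on the next turn. The library-free sketch below illustrates that idea with a dictionary keyed by thread ID. It is a simplified assumption about the behavior, not LangGraph's checkpointer; `histories` and `send` are hypothetical names, and the reply is generated by a toy rule instead of a model.

```python
from collections import defaultdict

# Hypothetical in-memory store: thread_id -> list of (role, text) messages
histories: dict[str, list[tuple[str, str]]] = defaultdict(list)


def send(thread_id: str, text: str) -> str:
    """Load the thread's history, add the new message, and store the reply."""
    history = histories[thread_id]
    history.append(("user", text))
    user_turns = sum(1 for role, _ in history if role == "user")
    reply = f"You have sent {user_turns} message(s) in this conversation."
    history.append(("ai", reply))
    return reply


first = send("alice", "Why is the sky blue?")
second = send("alice", "And why are sunsets red?")
other = send("bob", "What is a volcano?")

print(second)  # the "alice" thread remembers the earlier turn
print(other)   # the "bob" thread starts with an empty history
```

Because the history lives under the thread ID, the caller only sends the newest message each time, and separate conversations never see each other's messages.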

Step 5: Create the CLI Chat Interface

Now connect everything to a terminal interface:

```python
import uuid

print("Hello! I'm your friendly science bot. Ask me anything! (Type 'quit' to exit)")

# A unique thread ID tells the checkpointer which conversation history to load.
session_id = str(uuid.uuid4())
config = {"configurable": {"thread_id": session_id}}

while True:
    user_input = input("You: ")
    if user_input.lower() in ["quit", "exit", "q"]:
        print("Goodbye! Keep exploring! ✨")
        break

    # The input to the graph is just the single new message.
    # The checkpointer handles loading the past messages.
    input_message = {"messages": [("user", user_input)]}

    # Stream the response, passing the config so the graph knows
    # which conversation to continue.
    for chunk in graph.stream(input_message, config=config):
        if "chatbot" in chunk:
            # Each chunk maps a node name to its update; print the node's reply
            print(f"Science Bot: {chunk['chatbot']['messages'][-1].content}", end="", flush=True)
    print()  # Print a newline after the bot finishes responding
```

Run the Chatbot

Ensure your folder structure looks like:

```
your-project-folder/
├── .env
└── main.py
```

Run the bot:

```bash
python main.py
```

With that, you've built a personalized science chatbot that remembers past messages and speaks in a fun, kid-friendly tone. You've also learned the fundamentals of LangGraph's state, nodes, and memory-powered architecture—essential tools for building intelligent conversational agents.

Full Code:

```python
# main.py

import os
import uuid  # Used to generate unique thread IDs
from typing import Annotated

from typing_extensions import TypedDict

from dotenv import load_dotenv
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_google_genai import ChatGoogleGenerativeAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, START
from langgraph.graph.message import add_messages


# --- 1. Define the State ---
class State(TypedDict):
    messages: Annotated[list, add_messages]


# --- 2. Set up the LLM and Prompt ---
load_dotenv()

llm = ChatGoogleGenerativeAI(model="gemini-1.5-flash")

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a friendly and fun science chatbot for kids. "
            "Explain concepts simply, use fun analogies, and always be encouraging. "
            "Keep your answers short and easy to understand.",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)

chain = prompt | llm


# --- 3. Define the graph nodes ---
def chatbot_node(state: State):
    """This node calls the LLM chain to generate a response."""
    return {"messages": [chain.invoke({"messages": state["messages"]})]}


# --- 4. Build the graph with a checkpointer ---
graph_builder = StateGraph(State)
graph_builder.add_node("chatbot", chatbot_node)
graph_builder.set_entry_point("chatbot")
graph_builder.set_finish_point("chatbot")

# The checkpointer stores each conversation's state between turns.
memory = MemorySaver()
graph = graph_builder.compile(checkpointer=memory)

# --- 5. Run the chatbot CLI with memory ---
print("Hello! I'm your friendly science bot. Ask me anything! (Type 'quit' to exit)")

# Define a unique ID for our conversation thread.
# This ID tells the checkpointer which conversation history to load.
# We generate a random one each time the script runs for a new session.
session_id = str(uuid.uuid4())
config = {"configurable": {"thread_id": session_id}}

# There is no need to manage the conversation history ourselves.
while True:
    user_input = input("You: ")
    if user_input.lower() in ["quit", "exit", "q"]:
        print("Goodbye! Keep exploring! ✨")
        break

    # The input to the graph is just the single new message.
    # The checkpointer handles loading the past messages.
    input_message = {"messages": [("user", user_input)]}

    # Stream the response, passing the config so the graph knows
    # which conversation to continue.
    for chunk in graph.stream(input_message, config=config):
        if "chatbot" in chunk:
            # Each chunk maps a node name to its update; print the node's reply
            print(f"Science Bot: {chunk['chatbot']['messages'][-1].content}", end="", flush=True)
    print()  # Print a newline after the bot finishes responding
```

Conclusion

AI agents are evolving beyond simple chatbots; they now require statefulness, resilience, human collaboration, and deep context. These demands can't be met by prompt engineering alone.

LangGraph rises to this challenge with production-grade orchestration, durable execution, rich memory, and human-in-the-loop support, all while giving you full control over your agent's logic and behavior. It's built for teams that need reliable infrastructure without sacrificing flexibility.

With tools like LangSmith for observability and the LangGraph Platform for visual development and deployment, you're not just adopting a framework; you're entering a growing ecosystem designed for the future of intelligent agents.

Ready to Bring Production-Grade Agents to Life? Let’s Talk.

If this walkthrough sparked ideas for your own AI initiative—whether that’s an internal automation, a customer-facing assistant, or a fully-fledged product—we’d love to hear about it. At Appri AI, we turn proofs-of-concept into revenue-generating solutions by combining:

- Deep technical expertise in LangGraph, LangChain, custom LLM fine-tuning and secure cloud deployment.

- Proven consulting playbooks that take projects from scoping and rapid prototyping all the way through launch and ongoing optimisation.

- A partnership mindset that keeps your business goals, brand voice and compliance needs front-and-centre.

👉 Start the conversation

- Book a free 30-minute strategy call – pick a slot that works for you.

- Prefer email? Drop a quick note to [email protected] with a line or two about what you’re building.

Let’s co-create the next wave of intelligent, resilient, human-aware agents—tailored to your business.